I run a popular cloud security newsletter called AWS Security Digest, with about 7,000 subscribers. I enjoy writing for them, but it requires significant commitment. Every Monday, I dedicate most of my workday to it. Regardless of illness, fatigue, or holidays, it must be published by 8am ET. While rewarding and enjoyable, it can also be exhausting.

So I wanted to see if there was a way to get AI to do some magic and run a newsletter for me, instead of me, so I could be lazy and bask in the glory. This is my story.

TL;DR: It was tricky but it worked. Subscribe at bedrockbrief.com. You can make one too - here is the code.

What’s this terrible newsletter going to be about?

HEY! It’s not terrible. Yet.

Someone smart would probably start by taking the time to do some market research. Luckily I don’t have the burden of being smart so I asked ChatGPT to help.

I want to create an AI-generated newsletter focused on a topic related to AWS. My goal is to avoid competing with too many existing newsletters, while still choosing a subject broad enough to attract a sizable audience. Can you help me brainstorm ideas?

Some additional requirements:

* The data should be easily accessible (e.g., RSS feeds, documentation diffs, blogs).

* There must be enough change or new content each week to support a weekly cadence.

* The content generation process needs to be robust - AI hallucinations shouldn’t compromise the output.

It came up with a bunch of not-so-awful ideas. We laughed, we cried. After some back and forth, I settled on one we (Gippity and I) both thought had legs. [Narrator: newsletters do not have legs]

The details can be refined later once some draft issues start rolling in.

What even is a newsletter?

Newsletters sound simple, and they can be, but there’s a bit more to them than just content, especially if you want them to become big.

- Name/Domain - Every good side project starts with a domain that is registered and paid for but probably never used.

- Website - One could argue that a Substack account and some social media presence is better but I’m old fashioned. I’m BD (Before Dialup).

- Email delivery - There has to be a way to send the inspirational content.

- Analytics - Eventually we’ll want to optimize and that requires understanding what’s working and what’s “pre-working”. That’s like pre-revenue for newsletter founders.

- Feedback - Your subscribers are a valuable resource for you to learn from and improve.

- Content - Oh yeh, and the stuff that people actually come for, the inspirational content.

- Growth engine - There has to be a way to get that next subscriber, but at scale. There are a number of techniques that just work.

The operational infrastructure of a newsletter was not that interesting for this project. I really wanted a single platform to handle the first four items (domain, website, email delivery, and analytics) for me.

I tried Substack first. It felt like the obvious choice until I started playing with content and realized that Substack doesn’t have a usable content API. There are some reverse-engineered libraries for it, but the ones I tried broke on recent Substack authentication changes and the Cloudflare annoyance baked in. I guess they want people to write directly on their platform and not, you know, use AI to generate content.

Eventually I settled on Ghost (Pro).

Ghost is a powerful app for professional publishers to create, share, and grow a business around their content. It comes with modern tools to build a website, publish content, send newsletters & offer paid subscriptions to members.

It’s an open source product with a paid SaaS version that includes a content API, which it intuitively calls the Admin API. Anyway, I paid many Australian dollars converted to freedom dollars for the platform, bought BedrockBrief.com as suggested by Gippity, and started configuring.

What content should be in the newsletter?

So the plan for content was to use the extremely sophisticated strategy of trial and error - find all the data sources that might be useful, and then try some experiments to turn them into content sections. Once I had a bunch of worthy content candidates, I could turn them into an outline.

The What’s New blog

The AWS What’s New blog is perfect because it has a stream of announcements each week. Sometimes even hundreds of announcements. The actual content is sparse, usually 2-3 paragraphs for each announcement, but there’s enough there for snappy bites that might interest people.

It was suggested by Gippity because it has an RSS feed that’s trivial to parse. All that’s needed is a good prompt to bring it to life. Given the summaries are short, I figured one-sentence bites of news were a perfect format for these announcements. This prompt produced results that made me not hate myself:

Write a one sentence summary of this news item. Make sure you include the most important technical detail. Make it enticing to click and clear why someone should click. Ensure it’s clear why the reader should care without using marketing/corporate speak. Avoid jargon and make it understandable by an entry level developer. Avoid “now supports” and “has added” language. Do not sound like an AWS salesperson.

Here’s a summary Claude 3 Haiku made of one news item:

Amazon Bedrock Data Automation now processes popular file formats like Word docs and high-quality H.265 videos, making it easier to build efficient AI pipelines for analyzing text and video content.

Not bad. Except the feed includes all the announcements, not just the AI ones, so I needed a way to find the right ones. I chose to look for the presence of at least one AI-related keyword, but asking an LLM to decide could work as well.

    # Check for AI service names from the global list
    for service in AWS_AI_SERVICES:
        if service.lower() in title_lower or service.lower() in desc_lower:
            return True

    # Check for specific AI-related keywords/phrases
    ai_keywords = [
        "artificial intelligence", "machine learning", "large language model",
        "foundation model", "generative ai", "claude", " llm", "anomaly detection",
        "natural language processing", "computer vision", "speech recognition",
        "text-to-speech", "optical character recognition", "entity resolution"
    ]
    for keyword in ai_keywords:
        if keyword in title_lower or keyword in desc_lower:
            return True

Having a few of these announcements towards the top of the newsletter should be a great start.
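For the "trivial to parse" part, here's a minimal sketch using only the standard library. It assumes a plain RSS 2.0 layout (`item` elements with `title`, `link`, and `description`), and the sample XML, the `parse_feed` and `mentions_ai` names, and the short keyword tuple are all made up for illustration, not lifted from the project.

```python
import xml.etree.ElementTree as ET

# Made-up sample in standard RSS 2.0 layout; the real feed would be fetched over HTTP.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <item>
    <title>Amazon Bedrock adds a new foundation model</title>
    <link>https://example.com/bedrock</link>
    <description>Generative AI news.</description>
  </item>
  <item>
    <title>Amazon QuickSight expands limits on calculated fields</title>
    <link>https://example.com/quicksight</link>
    <description>More calculated fields per dataset.</description>
  </item>
</channel></rss>"""


def parse_feed(xml_text):
    """Return a list of {title, link, description} dicts, one per <item>."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "description": (item.findtext("description") or "").strip(),
        })
    return items


def mentions_ai(item, keywords=("foundation model", "generative ai", "bedrock")):
    """Keyword check in the same spirit as the filter above (hypothetical keyword list)."""
    text = f"{item['title']} {item['description']}".lower()
    return any(keyword in text for keyword in keywords)


ai_items = [item for item in parse_feed(SAMPLE_FEED) if mentions_ai(item)]
```

Only the Bedrock item survives the filter here; the QuickSight one is dropped because none of the keywords appear in its title or description.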

AI/ML blog

The AWS AI/ML blog (and RSS feed) has a lot more depth than the announcements and the cadence of entries is very high for the depth of technical detail in the posts. It’s really quite impressive. So there’s a decision to be made: how much of this content, if any, should we include?

For this one, I’d like to pick the most “interesting” item and give the reader an insight into that, and let them choose if they would like to see any of the other items.

There’s no categorization problem like announcements since the AI/ML blog is restricted to the target subject matter. However, a method for picking the most interesting item is required.

AWS YouTube

Video content is much more engaging than text so it would be awesome to include. AWS produces a metric ton of video content. They have at least 3 YouTube channels: 1. AWS general 2. AWS Events 3. AWS Developers. They also have a Twitch channel for podcasting and live tutorials.

The YouTube channels cover all sorts of topics, not just AI. This can be resolved the same way as the What’s New feed, just by scanning text for keywords. Except for one tiny detail: videos are not text.

The good news is YouTube has an undocumented captions API which, when enumerated, essentially provides a full transcript of the video without the need for transcription. To get access to the YouTube Data API, I had to start a Google Cloud project, enable the YouTube Data API, and then create an API key.
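With an API key in hand, listing a channel's recent uploads comes down to one call to the Data API's search endpoint. The sketch below only builds the request URL and pulls video IDs out of a response, so it runs without a key. The channel ID and key are placeholders, the sample response is made up and trimmed to the fields used, and the function names are hypothetical.

```python
import json
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://www.googleapis.com/youtube/v3/search"


def build_search_url(api_key, channel_id, published_after, max_results=25):
    """Build a YouTube Data API v3 search URL for a channel's recent videos."""
    params = {
        "key": api_key,
        "channelId": channel_id,
        "part": "snippet",
        "type": "video",
        "order": "date",
        "publishedAfter": published_after,  # RFC 3339 timestamp
        "maxResults": max_results,
    }
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def extract_videos(response_json):
    """Pull (video_id, title) pairs out of a search response body."""
    data = json.loads(response_json)
    return [
        (item["id"]["videoId"], item["snippet"]["title"])
        for item in data.get("items", [])
    ]


# Trimmed, made-up response showing only the fields used above.
SAMPLE_RESPONSE = json.dumps({
    "items": [
        {"id": {"kind": "youtube#video", "videoId": "abc123"},
         "snippet": {"title": "Building agents on Amazon Bedrock"}},
    ]
})

url = build_search_url("YOUR_API_KEY", "HYPOTHETICAL_CHANNEL_ID", "2025-01-06T00:00:00Z")
videos = extract_videos(SAMPLE_RESPONSE)
```

The titles coming back from the search call are what the keyword scan would run against before bothering to fetch a transcript.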

How to go from content outline to content agent?

Okay, we have a plan for what to put in the newsletter.

Now this is the fun bit - using AI to draft a newsletter issue. There are heaps of options to choose from for making this happen (no-code, low-code, all-code). I decided to use Python and AWS Bedrock Agents. I like Python and wanted to learn about AWS’ shiny agentic platform. Plus it would be weird to automate an ‘AI on AWS’ newsletter and then not use AI on AWS?!

I have a confession though. Several really. I’ll keep the personal ones to myself… for now. But the ones that should concern you are:

- An AI agent is probably not the best tool for newsletter generation. The process is pretty strict, well defined, and intended to produce consistent output issue to issue. There aren’t many decisions to be made and no autonomy is required. I don’t care. I just wanted to learn how Bedrock Agents work.

- I one-shot a lot of text output, against my own advice. Maybe I’m just bad at computers but I find one-shot prompts produce much better results than re-running text through an LLM with an agent or otherwise. Those recycled outputs invariably change the text too much even with careful prompting. Someone please teach me the way.

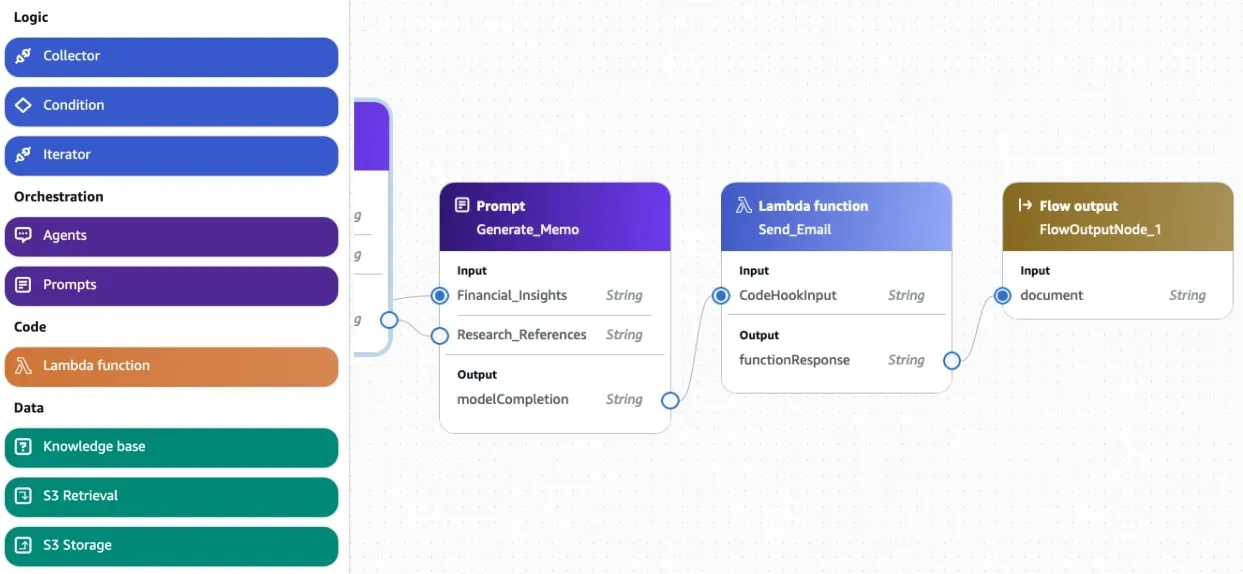

- I took an infrastructure approach to this and built everything in Python CDK, which is sort of antithetical to agent development, and certainly to an agent with a very defined process. I realized halfway through that it was a lot of unnecessary pain given the existence of Bedrock Flows, which is a visual interface for stitching together inputs, outputs, databases, and agents. It would have been much faster to go that route. C’est la vie.

Confessions of stupidity aside, my approach to building this was to split it up into sections and write separate Python code to generate each. Once I was happy with a given section I could give the instructions to the agent to use.

Each section calls out to an LLM asking it to pull out the most interesting topic or summarize things, or both.

In Bedrock, models run in either “on-demand” or “provisioned” inference mode. On-demand is the classic cloud pay-as-you-go mode, while provisioned requires a committed spend, so I only used on-demand models. Here’s a little script I wrote to list them:

    import boto3

    client = boto3.client('bedrock', region_name='us-east-1')

    response = client.list_foundation_models(
        byInferenceType="ON_DEMAND"
    )
    models = response['modelSummaries']

    print(f'Found {len(models)} available models supporting on-demand inference:')
    for model in models:
        print(f'- {model["modelId"]}')

I ultimately settled on anthropic.claude-3-5-sonnet-20240620-v1:0 as it produced the best output while being available on-demand in us-east-1.
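For a feel of what a single call to that model looks like, here's a minimal sketch using the Bedrock Converse API. Treat it as one way to do it: the project may well use `invoke_model` instead, the `ask_model` helper is hypothetical, the temperature is an arbitrary pick, and `FakeClient` is just a stand-in so the snippet runs without AWS credentials.

```python
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def ask_model(client, prompt, system_prompt=None, max_tokens=512):
    """Send one user message through the Bedrock Converse API and return the text."""
    request = {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Arbitrary settings for illustration, not tuned values.
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.7},
    }
    if system_prompt:
        request["system"] = [{"text": system_prompt}]
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"].strip()


class FakeClient:
    """Stand-in for boto3.client("bedrock-runtime") so the sketch runs offline."""

    def converse(self, **kwargs):
        self.last_request = kwargs
        return {"output": {"message": {
            "role": "assistant",
            "content": [{"text": " A one sentence summary. "}],
        }}}


fake = FakeClient()
summary = ask_model(fake, "Write a one sentence summary of this news item.")
# With real credentials, pass boto3.client("bedrock-runtime", region_name="us-east-1") instead.
```

Passing the client in as an argument keeps the prompt logic easy to test without burning tokens.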

Announcements (Fresh Cut)

Announcements needed to be one sentence with a link. I didn’t want to use the AWS announcement titles because they were dry and sounded like corporate drivel, without any sort of value proposition. Instead I downloaded each full announcement and passed it through this simple prompt:

user_prompt = f"""Write a one sentence summary of this news item.

Make sure you include the most important technical detail.

Make it enticing to click and clear why someone should click.

Ensure it's clear why the reader should care without using marketing/corporate speak.

Avoid jargon and make it understandable by an entry level developer.

Avoid "now supports" and "has added" language. Do not sound like an AWS salesperson.

Do not include any intro, explanation, or preamble.

Title: {title}

Description: {description}

"""

I then passed the outputs to a Jinja2 template that looks like so:

{% if announcements %}

{%- for announcement in announcements %}

- {{ announcement.content }} [Read announcement →]({{ announcement.url }})

{%- endfor %}

{%- else %}

*No announcements this week*

{%- endif %}

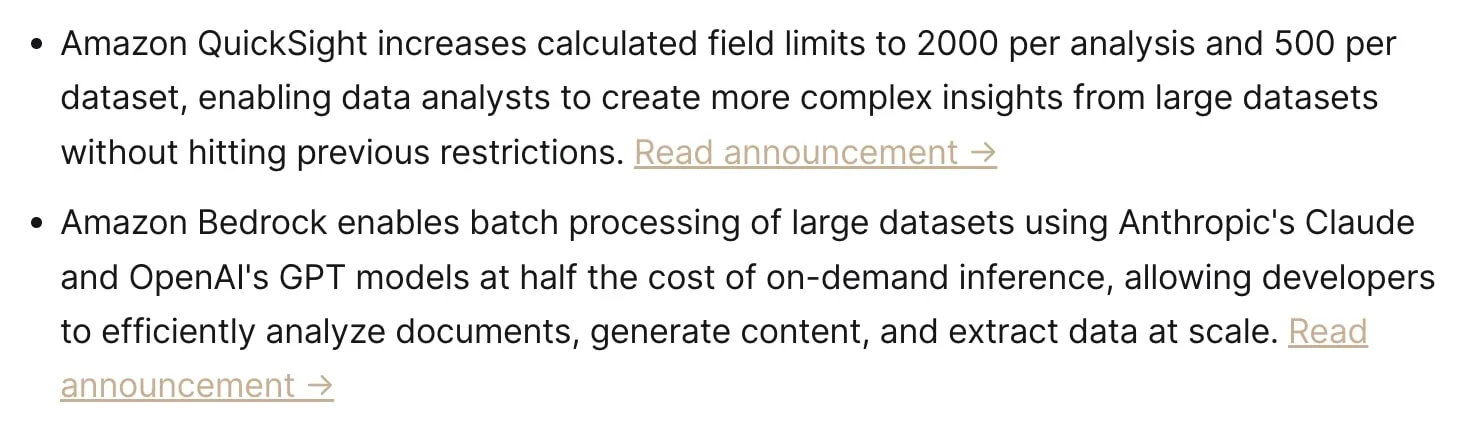

Producing an output like this:

I think we can all agree that’s better than “Amazon QuickSight expands limits on calculated fields” or “Amazon Bedrock now supports Batch inference for Anthropic Claude Sonnet 4 and OpenAI GPT-OSS models”.

Improvement idea: Sort the list by what’s most important to the most people. I’ve discussed prioritization approaches using AI before here.

Blogs (The Quarry)

You’d be surprised how many AI-related blog posts AWS can churn out in one week. Blog post titles are much better than announcement titles so all that’s needed is a way to pick the best post. It’s not perfect but the below prompt did a decent job. When I was tuning it I asked it for a full explanation so I could understand its reasoning and adjust different factors.

You are an expert content analyst specializing in AWS Machine Learning and AI technologies.

You have been given a list of recent blog posts from the AWS Machine Learning blog.

Please analyze the following {len(posts)} blog posts and determine which one is the BEST overall.

Consider the following criteria:

1. Technical depth and educational value

2. Innovation and cutting-edge content

3. Clarity and quality of writing

4. Exclusion of sales pitches

5. Uniqueness and originality of the content

6. Applicability to largest audience

Here are the posts to analyze:

{formatted_posts_str}

Please provide your analysis in the following JSON format:

{{

"best_post_number": <integer>

}}
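Since models like to wrap JSON in chatter, the reply to a prompt like this is worth parsing defensively. A minimal sketch, assuming the posts were numbered from 1 in the prompt (the `parse_best_post_number` name is hypothetical):

```python
import json
import re


def parse_best_post_number(model_output, post_count):
    """Return a zero-based index into the post list, or None if the reply is unusable."""
    # Grab the first {...} span in case the model wrapped the JSON in prose.
    match = re.search(r"\{.*?\}", model_output, re.DOTALL)
    if not match:
        return None
    try:
        number = json.loads(match.group(0)).get("best_post_number")
    except json.JSONDecodeError:
        return None
    # Assumption: the prompt numbered the posts starting at 1.
    is_int = isinstance(number, int) and not isinstance(number, bool)
    if is_int and 1 <= number <= post_count:
        return number - 1
    return None
```

Returning `None` instead of raising lets the caller fall back to something boring, like the first post, rather than failing the whole issue.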

The featured post is then summarized with this prompt:

prompt = f"""{BEDROCK_SYSTEM_PROMPT}

Based on the following AWS ML blog post, write a 3 sentence, 1 paragraph summary that captures the essence and value of the post.

Include at least one technical detail that an engineer would find interesting.

RETURN ONLY THE SUMMARY, NO OTHER TEXT.

Do not include any intro, explanation, or preamble.

Title: {best_post['title']}

Description: {best_post['description']}

"""

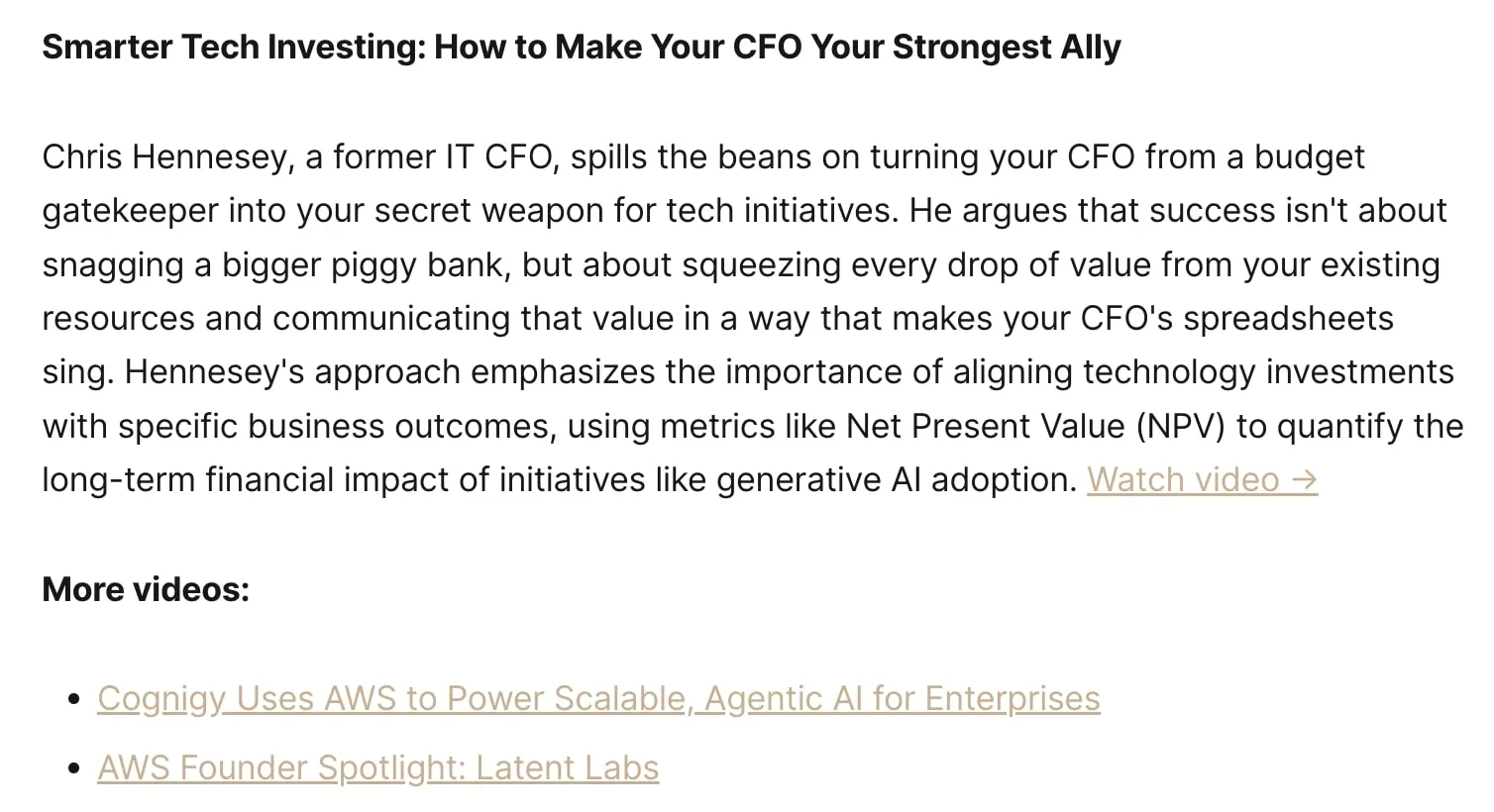

The BEDROCK_SYSTEM_PROMPT is a sort of style guide. I like to use it to make LLM outputs more interesting and human. I built it by having Gippity read all of my past blog posts and synthesize a prompt reflecting my writing style. After some adjustments it does a reasonable job of being entertaining but informative, without sounding like a robot.

BEDROCK_SYSTEM_PROMPT = """You are an expert newsletter editor and author who writes with a blend of deep AI and AWS expertise, conversational humor, and a hint of irreverence. You favor relatable analogies, playful subheadings, and memorable one-liners that engage both newcomers and seasoned pros.

**Voice & Tone:**

- Friendly, curious, and lightly sarcastic

- Conversational yet authoritative—think "expert explaining complicated details over coffee"

- Sprinkled with humor and the occasional witty quip

- Not salesy or promotional

**Style & Structure:**

- Short paragraphs and clear, descriptive subheadings

- Occasional rhetorical questions to hook the reader ("Ever wonder why…?")

- Metaphors or quick anecdotes that simplify tough technical concepts

- Step-by-step or bulleted breakdowns of complex processes

- Call-to-action or concluding takeaway that ties it all up

**Technical Details:**

- Focus on AWS AI and machine learning

- Provide enough background to help readers follow along, but avoid fluff

- Show real-world insight, referencing real world examples, research findings, or subtle details in AWS services

**Attitude & Perspective:**

- A bit rebellious but always instructive

- Unafraid to highlight vendor or feature shortcomings with a playful nudge

- Encourage readers to be inquisitive and self-sufficient

**DO NOT:**

- DO NOT use em dashes

- DO NOT use corporate shill language like essential, leverage(s), unleash(es), turbocharge(s), streamline(s), etc.

- DO NOT start sentences with "in a [something] world"

"""

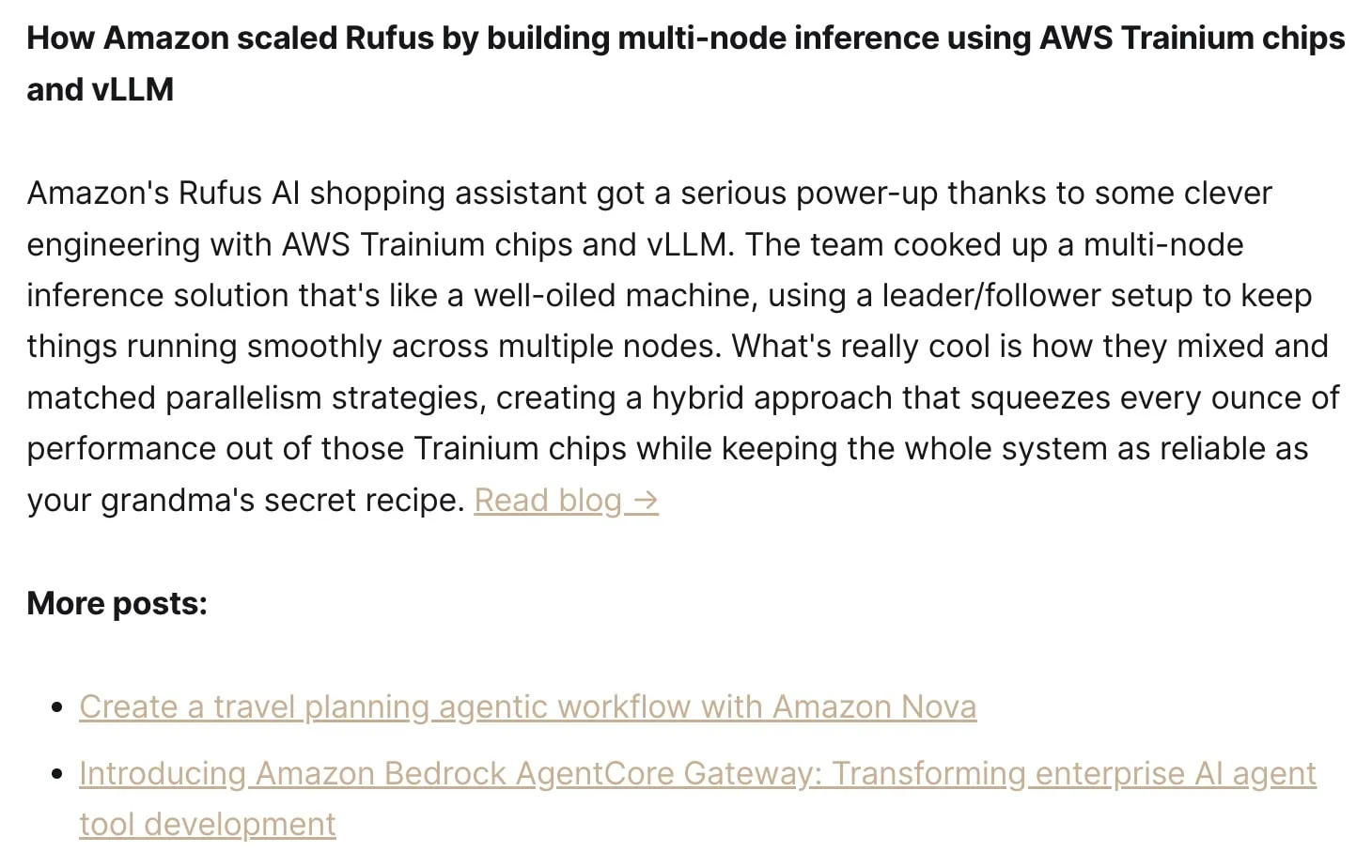

Here’s an example of the content it produces. What do you reckon?

YouTube videos (Core Sample)

This section is almost identical to blogs in terms of output and prompts. The only difference is the use of the transcript API to get text content from videos.

    from youtube_transcript_api import YouTubeTranscriptApi

    def fetch_transcript(video_id: str) -> str:
        try:
            ytt_api = YouTubeTranscriptApi()
            transcript = ytt_api.fetch(video_id)
            # Combine all transcript segments into one text
            full_text = ' '.join([snippet.text for snippet in transcript])
            return full_text
        except Exception as e:
            # Fall back to an empty transcript so one bad video doesn't stop the run
            print(f"Error fetching transcript for {video_id}: {e}")
            return ""

An example output:

Improvement idea: Add video thumbnails to make the content more visually exciting.

Introduction

I was happy with all the pieces of the newsletter. Now I just needed something compelling at the top to get and keep attention each week. I tried a few different things, including picking a theme from all the already generated content, but that was too unreliable based on just titles and snippets. The LLM took too many liberties. I also tried asking the model to talk about the sections themselves but that was too generic and repetitive.

Ultimately I settled on AWS AI news. I’m sure this won’t always be the case but for now there’s enough AI news at least tangentially related to AWS that the introduction can be filled purely with news.

The only thing is that in order to search Google News, you have to use a paid service because of the various limitations google imposes. I signed up for SearchApi.io to get search results, and use the newspaper3k library to download and parse the articles. It turns out parsing HTML for just the actual content is not trivial.

This worked well but still required tweaks. Many news sites block access from AWS and/or bot user agents so I excluded the most common of those so I could extract the full article text.

    url = "https://www.searchapi.io/api/v1/search"
    params = {
        "engine": "google_news",
        "q": "aws ai -site:amazon.com -site:wsj.com -site:bloomberg.com -site:youtube.com -site:investors.com -site:reuters.com -site:crn.com",
        "time_period": "last_week",
        "num": num_results,
        "api_key": api_key
    }

And then passed all the relevant article content to this prompt:

full_prompt = f"""{BEDROCK_SYSTEM_PROMPT}

You are an AI assistant tasked with writing a 3-paragraph introduction for "The Bedrock Brief" newsletter, which is a weekly newsletter about AI on AWS.

Based on the following recent AWS AI news articles, write an engaging 3-paragraph introduction that:

1. Summarizes the key trends and developments in AWS AI from the past week

2. Highlights the most important news and their implications

3. Includes at least one markdown link to a news article

4. Doesn't assume anything about the contents of the rest of the newsletter

DO NOT ADD HEADINGS

RESPOND WITH ONLY THE INTRODUCTION CONTENT, NO OTHER TEXT. DO NOT INCLUDE ANYTHING ELSE LIKE "Here is a 3-paragraph introduction"

Do not include any intro, explanation, or preamble.

Articles:

{articles_content}

"""

Notice the extreme insistence on not adding headings or preamble. That’s because models would fairly regularly return something like this at the start of their response:

Here is a 3-paragraph introduction for “The Bedrock Brief” newsletter, summarizing key AWS AI news and developments from the past week:

I didn’t want to have to run it through yet another prompt to remove that kind of text, so I left it as is hoping newer models do better. They seem to.
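If the preamble ever comes back, a deterministic cleanup is one alternative to a second prompt. This is a sketch of that idea rather than anything the pipeline does today: the `strip_preamble` name and the regex are made up, and the pattern only targets a first line that starts with "Here is/Here's" and ends in a colon.

```python
import re

# Matches a leading line such as "Here is a 3-paragraph introduction ...:".
# Caveat: a genuine opening line of the form "Here's what happened:" would be dropped too.
PREAMBLE = re.compile(r"\A\s*here(?:'s|’s| is| are)\b[^\n]*:\s*\n+", re.IGNORECASE)


def strip_preamble(text):
    """Drop one leading "Here is ...:" line if present; otherwise return the text trimmed."""
    return PREAMBLE.sub("", text, count=1).strip()


raw = "Here is a 3-paragraph introduction for the newsletter:\n\nAWS had a busy week."
cleaned = strip_preamble(raw)
```

Because the pattern requires the colon to end the line, a sentence like "Here's the thing: AWS shipped a lot." is left alone.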

Anyway, this prompt worked very well. It created really spicy and fun output. The model didn’t hold back on firing shots at everyone, especially AWS.

Having completed the text of the introduction, I needed to give it a representative feature image to bring the newsletter issue to life. I decided to use Amazon’s own on-demand image generation model, amazon.titan-image-generator-v2:0. Look, it’s not the best image generation model out there, and it’s not particularly close, but it does do a good enough job.

But before I could use it I needed a prompt, so I went full Inception and used another prompt to create the image prompt based on the introduction text.

prompt = f"""Create a single creative prompt for an AI image model (Amazon Titan Image Generator) based on the newsletter introduction below.

Requirements:

- Make it obvious how it relates to the introduction text

- Use thick paint brush strokes and watercolor painting style

- Include at least one rock element

- No text, lettering, watermarks, logos, trademarks, or brand names

- Do not depict specific products, UIs, or copyrighted characters

- Horizontal/wide aspect composition suitable for a feature banner

- Keep under 30 words.

Respond with ONLY the image prompt text, no quotes or extra narration.

Introduction:\n{introduction_text}\n"""

I’m still tweaking the styles but here’s an example of a prompt it came up with.

Abstract, text-free, no people or faces, no logos or trademarks, no brand names. Surreal watercolor landscape with floating rocks, swirling cloud formations, and a giant puzzle cube, using thick brushstrokes in vibrant colors

That resulted in this image:

There was a gotcha with image generation that is worth pointing out. There’s a content moderation layer applied to the model. It stops image data from being returned when triggered and is about as good at telling you why it did so as Apple is at telling you why you are no longer listed in the App Store. Here’s an example error:

Error generating or uploading feature image (JPEG only, no fallback): An error occurred (ValidationException) when calling the InvokeModel operation: Content in the all of the generated image(s) has been blocked and has been filtered from the response. Our system automatically blocked because the generated image(s) may conflict our AUP or AWS Responsible AI Policy.

I wasn’t trying to generate anything remotely sketchy and this was breaking my pipeline. I added a retry and pre-pended some guardrail instructions to hopefully make the image generation more reliable. 🤞
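The retry-plus-guardrail idea can be sketched like this. It's an illustration under assumptions: `generate` stands for whatever function calls the image model, the guardrail text is borrowed from the example prompt above (whether that's the exact prefix used is a guess), and the broad `except` would be narrowed to botocore's `ClientError` in real code.

```python
import time

# Assumed guardrail text, borrowed from the example image prompt above.
GUARDRAIL_PREFIX = (
    "Abstract, text-free, no people or faces, no logos or trademarks, no brand names. "
)


def guarded(prompt):
    """Prepend the guardrail instructions to an image prompt."""
    return GUARDRAIL_PREFIX + prompt


def generate_with_retries(generate, prompt, attempts=3, delay_seconds=2.0, sleep=time.sleep):
    """Call generate(prompt) until it succeeds or the attempts run out."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return generate(prompt)
        except Exception as error:  # in practice, narrow this to botocore's ClientError
            last_error = error
            if attempt < attempts:
                sleep(delay_seconds * attempt)  # simple linear backoff
    raise RuntimeError(f"Image generation failed after {attempts} attempts") from last_error
```

Retrying the same prompt only helps because image generation isn't deterministic; a blocked result on one attempt can pass on the next.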

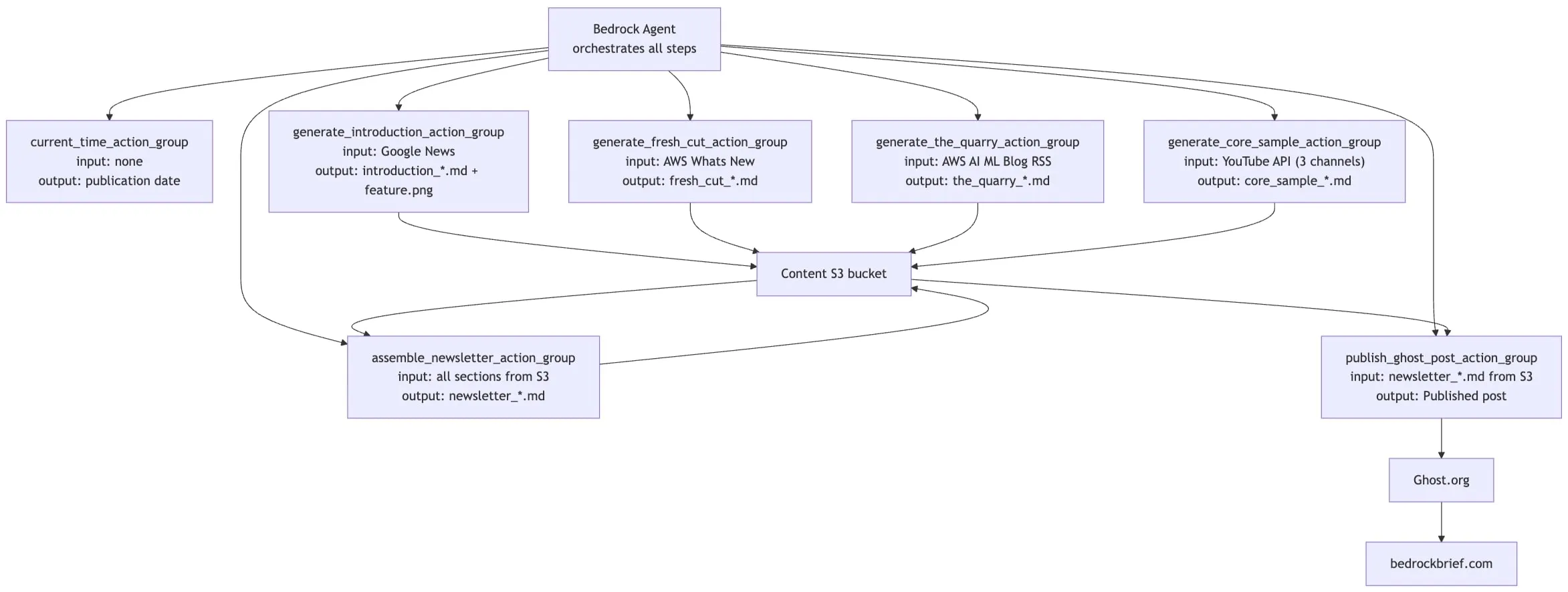

How to turn content into a newsletter issue?

Once I was happy with all the individual pieces of the newsletter looking like I wanted, I needed to stitch them together and publish the issue. That’s where the Bedrock Agent came into play. Again, it probably wasn’t the best choice for the task given how rigid everything was, but I made it work.

A Bedrock Agent has the following parts we care about:

- Instructions - Think of this as the prompt which describes the agent’s purpose, objective, and working directives.

- Action groups - These are the tools the agent can use to take action.

Here’s what my agent configuration looks like:

    agent = bedrock.CfnAgent(
        self, "BedrockBriefAgent",
        agent_name="BedrockBriefAgent",
        description="A Bedrock agent for the Bedrock Brief project",
        instruction=f"""You are an expert author and editor of the Bedrock Brief
    newsletter, dedicated to builders of artificial intelligence services on top of AWS.
    - Generate all the newsletter sections
    - Assemble the newsletter from the sections
    - Publish the newsletter to Ghost.org, with the title "Bedrock Brief [publication date as %d %b %Y]"
    """,
        foundation_model=BEDROCK_MODEL_ID,
        action_groups=[
            current_time_action_group,
            generate_introduction_action_group,
            generate_fresh_cut_action_group,
            generate_the_quarry_action_group,
            generate_core_sample_action_group,
            assemble_newsletter_action_group,
            publish_ghost_post_action_group
        ],
        agent_resource_role_arn="",  # Will be set by CDK
        idle_session_ttl_in_seconds=3600,  # 1 hour
        orchestration_type="DEFAULT"
    )

I chose to make each action group a Lambda function but they can also be APIs. Since the newsletter is broken into sections, there’s one function for each section. Each function has a description and parameters that the agent uses to decide when and how to execute it.

Here’s how the generate_introduction action group is configured:

    generate_introduction_action_group = bedrock.CfnAgent.AgentActionGroupProperty(
        action_group_name="GenerateIntroductionActionGroup",
        description="Action group for generating newsletter introduction",
        action_group_executor=bedrock.CfnAgent.ActionGroupExecutorProperty(
            lambda_=generate_introduction_arn
        ),
        action_group_state="ENABLED",
        function_schema=bedrock.CfnAgent.FunctionSchemaProperty(
            functions=[
                bedrock.CfnAgent.FunctionProperty(
                    name="generateIntroduction",
                    description="Generate the introduction section of the newsletter",
                    parameters={
                        "cutoff_date": {
                            "type": "string",
                            "description": "The cutoff date for the newsletter in YYYY-MM-DD HH:MM:SS format",
                            "required": True
                        }
                    }
                )
            ]
        )
    )

Since a cutoff date (start of the issue week) is required, the agent also needs a way to get today’s date, hence current_time_action_group.
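On the Lambda side, a function-schema action group receives the function name plus a list of parameters and has to answer in a specific envelope. Here's a minimal sketch of that handshake for `generateIntroduction`; the body text is a placeholder for the real work, and the sample event is hand-written to show only the fields the handler reads.

```python
def lambda_handler(event, context):
    """Handle a function-schema action group invocation from a Bedrock Agent."""
    # Parameters arrive as a list of {"name", "type", "value"} dicts.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    cutoff_date = params.get("cutoff_date", "")

    # Placeholder for the real work (search news, call the model, save the result).
    body = f"Introduction generated for week starting {cutoff_date}"

    return {
        "messageVersion": event.get("messageVersion", "1.0"),
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": {
                "responseBody": {"TEXT": {"body": body}}
            },
        },
    }


# Hand-written sample event with only the fields used above.
sample_event = {
    "messageVersion": "1.0",
    "actionGroup": "GenerateIntroductionActionGroup",
    "function": "generateIntroduction",
    "parameters": [
        {"name": "cutoff_date", "type": "string", "value": "2025-01-06 00:00:00"}
    ],
}
result = lambda_handler(sample_event, None)
```

The agent only ever sees the short `body` string, which matters once the sections get long.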

Assemble

When I first started working on the agent, I had the agent doing content assembly. Each function would return its entire content and eventually the agent would stitch it all together. That failed miserably as I hit invisible limits in how much context the agent could hold. Eventually I settled on storing all the content in an S3 bucket and never sending it to the agent.
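The "park it in S3 and hand back a pointer" pattern can be sketched as below. The key layout, bucket name, and function names are hypothetical, and `FakeS3` stands in for `boto3.client("s3")` so the snippet runs offline.

```python
import io


def save_section(s3_client, bucket, issue_date, section_name, markdown):
    """Write one section to S3 and return a short pointer for the agent."""
    key = f"issues/{issue_date}/{section_name}.md"  # hypothetical key layout
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=markdown.encode("utf-8"),
        ContentType="text/markdown",
    )
    return {"section": section_name, "s3_key": key, "characters": len(markdown)}


def load_section(s3_client, bucket, key):
    """Read a section back when it's time to assemble the issue."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return response["Body"].read().decode("utf-8")


class FakeS3:
    """In-memory stand-in for boto3.client("s3")."""

    def __init__(self):
        self.objects = {}

    def put_object(self, Bucket, Key, Body, ContentType=None):
        self.objects[(Bucket, Key)] = Body
        return {}

    def get_object(self, Bucket, Key):
        return {"Body": io.BytesIO(self.objects[(Bucket, Key)])}


s3 = FakeS3()
pointer = save_section(s3, "hypothetical-bucket", "2025-01-06", "fresh_cut", "- An announcement")
restored = load_section(s3, "hypothetical-bucket", pointer["s3_key"])
```

The agent gets the small `pointer` dict back from each function, and only the assemble step ever reads the full text.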

Assembling an issue is a single call to Jinja2 with this template:

{{ introduction_section }}

{{ fresh_cut_section }}

{{ the_quarry_section }}

{{ core_sample_section }}

Publish

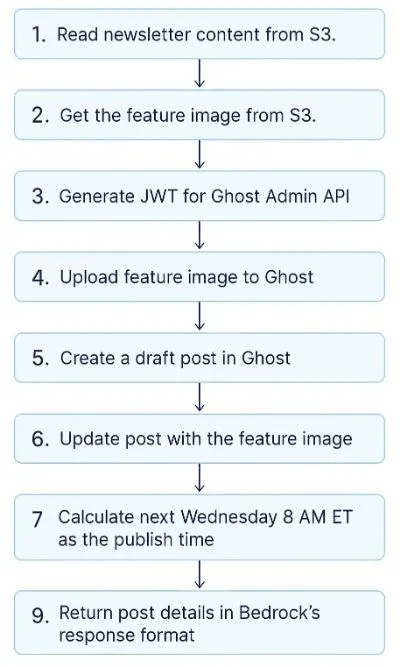

Perhaps the trickiest part of the newsletter process is publication, mainly because of quirks in the Ghost Admin API.

Here’s how it works:

Most of that is easy except setting the content. The Ghost documentation says it’s possible to provide HTML that will automatically be converted to its internal Lexical representation. That just didn’t work for me.

Luckily Ghost has a Markdown component which is great but I still had to provide the structure in Lexical, including the containing Markdown component. Here’s how I did it after reverse engineering existing post representations.

    # Create lexical structure with markdown element
    lexical_content = {
        "root": {
            "children": [
                {
                    "children": [],
                    "direction": None,
                    "format": "",
                    "indent": 0,
                    "type": "paragraph",
                    "version": 1
                }
            ],
            "direction": None,
            "format": "",
            "indent": 0,
            "type": "root",
            "version": 1
        }
    }

    # Add markdown element to the lexical structure
    markdown_element = {
        "type": "markdown",
        "version": 1,
        "markdown": content
    }

    # Insert markdown element as the first child
    lexical_content["root"]["children"].insert(0, markdown_element)
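For completeness, here's a sketch of the other half of a Ghost Admin API call: the auth token and the request body. Ghost Admin API keys come as `id:secret`, and requests are authorized with a short-lived HS256 JWT signed with the hex-decoded secret. The function names are made up, the token is built with the standard library instead of a JWT package, and the key in the example is a dummy.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def ghost_admin_token(admin_api_key, now=None):
    """Build a short-lived HS256 JWT from a Ghost Admin API key ("id:secret")."""
    key_id, secret_hex = admin_api_key.split(":")
    issued_at = int(now if now is not None else time.time())
    header = {"alg": "HS256", "typ": "JWT", "kid": key_id}
    payload = {"iat": issued_at, "exp": issued_at + 300, "aud": "/admin/"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(
        bytes.fromhex(secret_hex), signing_input.encode("ascii"), hashlib.sha256
    ).digest()
    return f"{signing_input}.{_b64url(signature)}"


def build_post_body(title, lexical_content, status="draft"):
    """Ghost expects the lexical document as a JSON string, not a nested object."""
    return {"posts": [{"title": title, "lexical": json.dumps(lexical_content), "status": status}]}


# Dummy key for illustration only.
token = ghost_admin_token("abc123:" + "00" * 32, now=1700000000)
body = build_post_body("Bedrock Brief 06 Jan 2025", {"root": {"children": []}})
# POST body as JSON to <site>/ghost/api/admin/posts/ with header "Authorization: Ghost <token>".
```

Defaulting `status` to `"draft"` is a deliberate choice for the sketch: it leaves a human-sized gap between generating an issue and emailing it to everyone.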

Schedule weekly issue

As far as I could tell, there’s no way to run an agent on a schedule in a Bedrock native way. So I created a Lambda to do that every Tuesday 10pm ET.

In order to run a Bedrock Agent, it needs to first be “prepared”. Preparing is like building or compiling for agents. It takes a little while and requires a loop to poll until its state is changed to PREPARED.
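The prepare-then-poll loop looks roughly like this. It's a sketch, not the scheduler Lambda itself: the timeout and poll interval are arbitrary, the agent ID is a placeholder, and `FakeAgentClient` stands in for `boto3.client("bedrock-agent")` so it runs offline.

```python
import time


def prepare_and_wait(agent_client, agent_id, timeout_seconds=300, poll_seconds=5, sleep=time.sleep):
    """Call PrepareAgent, then poll GetAgent until the agent reports PREPARED."""
    agent_client.prepare_agent(agentId=agent_id)
    waited = 0
    while waited <= timeout_seconds:
        status = agent_client.get_agent(agentId=agent_id)["agent"]["agentStatus"]
        if status == "PREPARED":
            return status
        if status == "FAILED":
            raise RuntimeError(f"Agent {agent_id} failed to prepare")
        sleep(poll_seconds)
        waited += poll_seconds
    raise TimeoutError(f"Agent {agent_id} not prepared after {timeout_seconds} seconds")


class FakeAgentClient:
    """Stand-in for boto3.client("bedrock-agent") that replays a list of statuses."""

    def __init__(self, statuses):
        self._statuses = iter(statuses)
        self.prepare_calls = 0

    def prepare_agent(self, agentId):
        self.prepare_calls += 1
        return {"agentStatus": "PREPARING"}

    def get_agent(self, agentId):
        return {"agent": {"agentId": agentId, "agentStatus": next(self._statuses)}}


fake_agent_client = FakeAgentClient(["PREPARING", "PREPARING", "PREPARED"])
status = prepare_and_wait(fake_agent_client, "HYPOTHETICAL_AGENT_ID", sleep=lambda seconds: None)
```

Once the status flips to PREPARED, the scheduler can go ahead and invoke the agent through the runtime client.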

That’s it. Those are all the pieces to producing a single issue of the Bedrock Brief newsletter.

What now?

Well, you should subscribe to Bedrock Brief because it’s actually pretty damn awesome. Check out the past issues on the website. Tell your friends.

Try it for yourself!

Still to do

- I didn’t address the Analytics, Feedback, or Growth Engine parts of running a newsletter. Ghost does provide analytics and I think it’s totally reasonable to automate all of this stuff with Bedrock.

- There’s plenty more content left on the table. I mentioned Twitch. There are programmatically accessible feeds for IAM, CloudFormation, Reddit, and community content opportunities too.

Questions answered:

- Can you make a high quality newsletter without any human intervention?

  Yes. Resounding yes.

- Can you do it in Bedrock?

  Yes, 100%.

- Should you do it with Bedrock Agents?

  Probably not. The process and outputs are just too rigid and well defined. Bedrock Flows might be a better alternative but the best option is probably just some step functions.

- How “good” are Bedrock Agent related services?

  They’re good enough. There are still some rough edges. Documentation is patchy or difficult to find. Using IaC to do it is painful relative to interfaces like Bedrock Flows and N8N. However the models available on Bedrock are great and when you have all your infrastructure already on AWS, it’s nice to not have to learn a new platform.

- Would I do it again?

  I think so. I’ve learned from the process and I’ll probably apply it to other niches. The question now is will real humans find the content useful enough to tell their friends.